The Machine Learning Process

Data Pre-Processing

- Import Data: Load the dataset into your environment.

- Clean the Data: Handle missing values, remove duplicates, and correct inconsistencies.

- Split into Training and Test Sets: Divide the data into training and testing subsets, typically using an 80/20 or 70/30 split.
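The pre-processing steps above can be sketched in plain Python. This is a minimal illustration, not a definitive pipeline: the rows, the column meanings, and the helpers `clean` and `split_train_test` are hypothetical, and the in-memory list stands in for a dataset that would normally be imported from a file.

```python
import random

# Hypothetical raw rows: (age, salary, purchased); None marks a missing value.
raw_rows = [
    (25, 40000, 0),
    (32, 52000, 1),
    (32, 52000, 1),   # exact duplicate of the previous row
    (47, None, 1),    # missing salary
    (51, 88000, 1),
    (23, 31000, 0),
    (38, 61000, 0),
    (29, 45000, 0),
    (44, 79000, 1),
    (35, 58000, 1),
    (41, 72000, 1),
    (27, 39000, 0),
]

def clean(rows):
    """Drop rows with missing values, then drop exact duplicates (order kept)."""
    seen = set()
    cleaned = []
    for row in rows:
        if any(value is None for value in row):
            continue
        if row in seen:
            continue
        seen.add(row)
        cleaned.append(row)
    return cleaned

def split_train_test(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and split off the last test_fraction as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[:-n_test], shuffled[-n_test:]

rows = clean(raw_rows)
train_rows, test_rows = split_train_test(rows, test_fraction=0.2)  # 80/20 split
print(len(rows), len(train_rows), len(test_rows))  # prints: 10 8 2
```

Dropping incomplete rows is only one way to handle missing values; filling them in (for example with the column mean) is a common alternative.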

Modelling

- Build a Model: Select an appropriate machine learning algorithm.

- Train the Model: Fit the model to the training data.

- Make Predictions: Use the trained model to make predictions on the test data.
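As a rough illustration of the build, train, and predict steps, here is a small k-Nearest Neighbors classifier written from scratch. The class `KNNClassifier` and the toy data are assumptions made for this sketch; in practice a library implementation would normally be used.

```python
import math
from collections import Counter

class KNNClassifier:
    """Minimal k-nearest-neighbours classifier (illustrative, not optimized)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # k-NN "training" simply stores the labelled examples.
        self.X_ = list(X)
        self.y_ = list(y)
        return self

    def predict(self, X):
        return [self._predict_one(x) for x in X]

    def _predict_one(self, x):
        # Sort training points by Euclidean distance to x, then vote among the k closest.
        by_distance = sorted(
            zip(self.X_, self.y_), key=lambda pair: math.dist(pair[0], x)
        )
        votes = Counter(label for _, label in by_distance[: self.k])
        return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters labelled 0 and 1.
X_train = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
y_train = [0, 0, 0, 1, 1, 1]
X_test = [(1.1, 0.9), (5.1, 5.0)]

model = KNNClassifier(k=3)      # build the model
model.fit(X_train, y_train)     # train on the training data
y_pred = model.predict(X_test)  # predict on the test data
print(y_pred)  # prints: [0, 1]
```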

Evaluation

- Calculate Performance Metrics: Assess the model's performance using metrics such as accuracy, precision, recall, and F1 score.

- Make a Verdict: Determine the effectiveness of the model and decide on further steps, such as tuning hyperparameters or trying different algorithms.
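The metrics named above can be computed directly from the true and predicted labels. The following is a sketch for binary classification; the helper `classification_metrics` and the label lists are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical test labels and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

With these labels there are 3 true positives, 1 false positive, and 1 false negative, so all four metrics come out to 0.75.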

Feature Scaling

Feature scaling is the process of normalizing the range of the independent variables (features) in a dataset. It is an important data preprocessing step, especially for algorithms that rely on the distance between data points, such as Support Vector Machines (SVM) or k-Nearest Neighbors (k-NN).

Feature scaling is applied column-wise (to individual features) rather than row-wise.
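To see why distance-based algorithms are sensitive to scale, consider how much each feature contributes to the Euclidean distance between two points. In this sketch the two customers and the per-column ranges are hypothetical values chosen for illustration.

```python
import math

# Hypothetical customers described by (age in years, annual salary in dollars).
a = (25, 50_000)
b = (60, 51_000)

# Assumed (min, max) range of each column, one pair per feature.
ranges = [(18, 65), (30_000, 90_000)]

def squared_terms(p, q):
    """Per-feature squared differences that add up to the squared Euclidean distance."""
    return [(x - y) ** 2 for x, y in zip(p, q)]

def scale(point):
    """Rescale each feature using its own column's range (column-wise scaling)."""
    return tuple((v - lo) / (hi - lo) for v, (lo, hi) in zip(point, ranges))

raw = squared_terms(a, b)
scaled = squared_terms(scale(a), scale(b))

# Fraction of the squared distance that comes from the salary column.
raw_salary_share = raw[1] / sum(raw)
scaled_salary_share = scaled[1] / sum(scaled)

print(f"raw distance: {math.dist(a, b):.1f}, salary share: {raw_salary_share:.4f}")
print(f"scaled distance: {math.dist(scale(a), scale(b)):.3f}, salary share: {scaled_salary_share:.4f}")
```

Before scaling, the salary column accounts for almost all of the distance simply because its numbers are larger, even though the 35-year age gap is the more striking difference. After each column is rescaled by its own range, the age difference dominates instead.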

Techniques for Feature Scaling

- Normalization:

  - Formula: X' = (X - X_min) / (X_max - X_min), where X_min and X_max are the minimum and maximum values of the feature.

  - This method scales the data to a fixed range, typically [0, 1].

  - Use case: When you want all features to share the same scale without distorting the relative differences between values.

- Standardization:

  - Formula: X' = (X - μ) / σ, where μ is the mean and σ is the standard deviation of the feature.

  - This method rescales the data so that it has a mean of 0 and a standard deviation of 1. Most values will typically lie within roughly [-3, +3].

  - Use case: When you want to center the data, particularly when the features have different ranges.
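Both formulas can be applied to a single feature column in a few lines. This is a minimal sketch using only the standard library; the helper names `normalize` and `standardize` and the salary values are hypothetical, and using the population standard deviation for σ is an assumption of this example.

```python
import statistics

def normalize(values):
    """Min-max normalization: X' = (X - X_min) / (X_max - X_min)."""
    lo, hi = min(values), max(values)
    # Assumes the column is not constant (hi > lo), otherwise this divides by zero.
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Standardization: X' = (X - mu) / sigma."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # population standard deviation (an assumption)
    return [(v - mu) / sigma for v in values]

# Hypothetical salary column.
salaries = [30_000, 45_000, 60_000, 75_000, 90_000]

normalized = normalize(salaries)
standardized = standardize(salaries)

print(normalized)  # prints: [0.0, 0.25, 0.5, 0.75, 1.0]
print([round(v, 3) for v in standardized])
```

After normalization the column spans exactly [0, 1]; after standardization it has a mean of 0 and a standard deviation of 1.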

Example

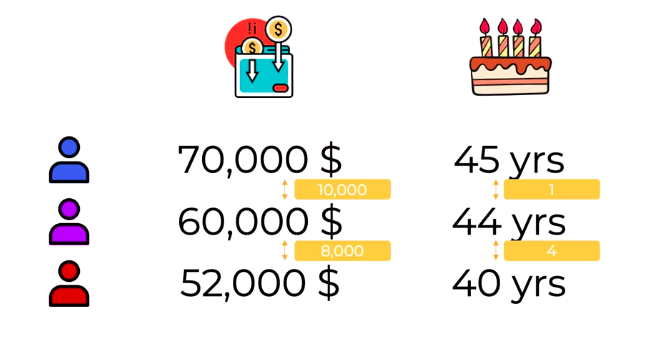

Before applying feature scaling:

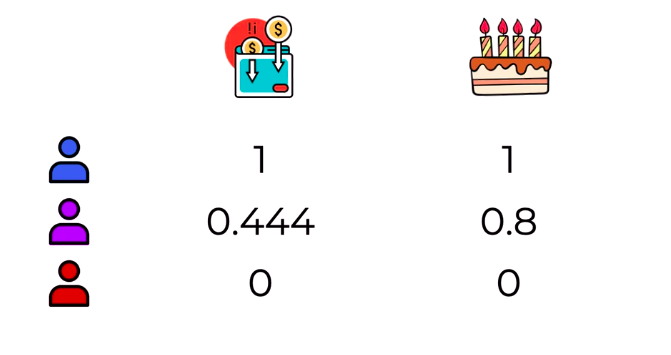

After applying feature scaling: